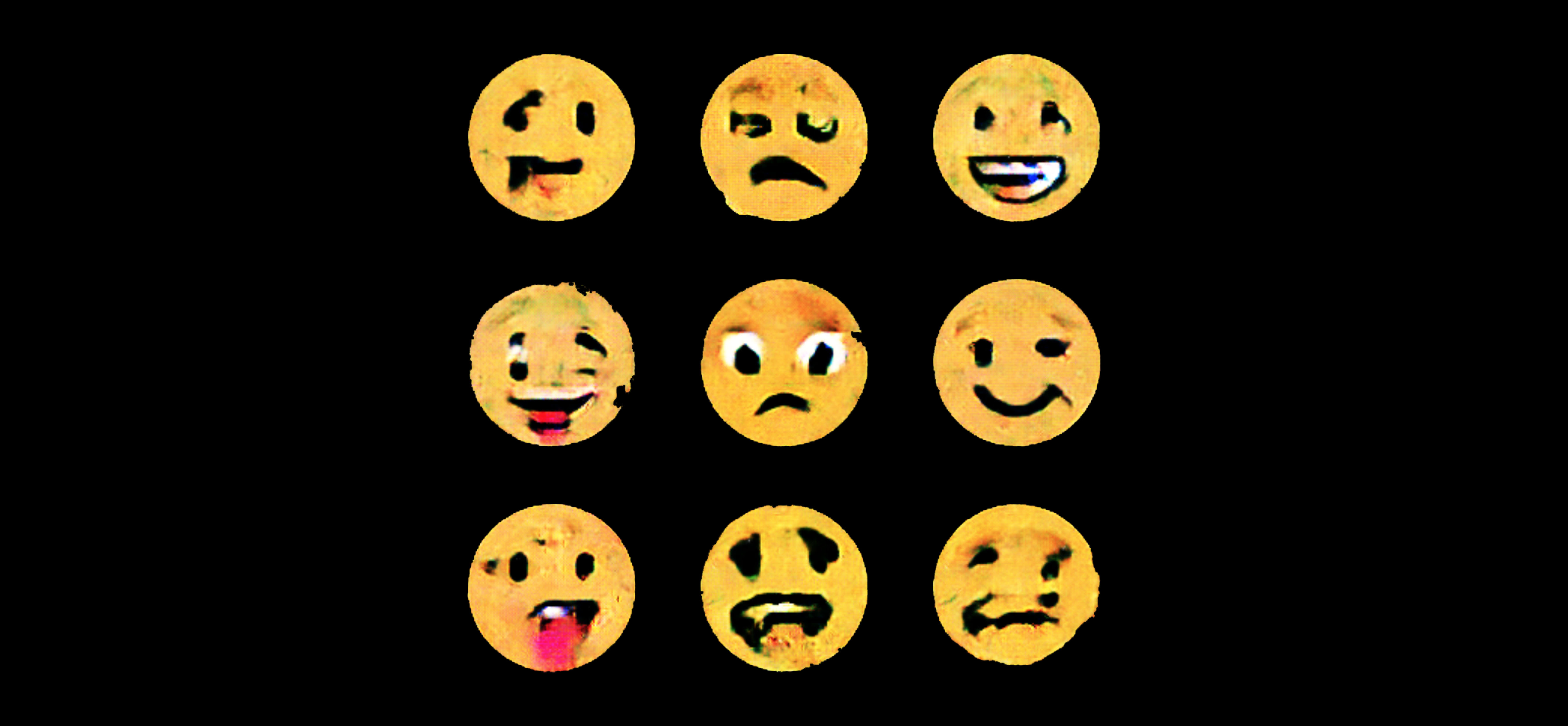

What happens when you train an AI system to create Emoji?

Using a Deep Convolutional Generative Adversarial Network (DCGAN) and a dataset of 3,145 individual, commonly used Emoji as input, we trained our model for 25 epochs to come up with new ones.

The resulting faces and their expressions range from the expected happy, sad and angry, through weird-looking, to flabbergastingly horrifying.

Make sure to check out our Instagram page for more upcoming results and variations.

In-between/artificial emotions

The animations show the training process and the transitional morphing between shapes with every frame being a newly-created Emoji.

Further down are a video and a small interactive map for exploring the training process and its output: from vague, noisy blur to concrete forms and more distinct shapes and faces.

Trying to make (some) sense of the results

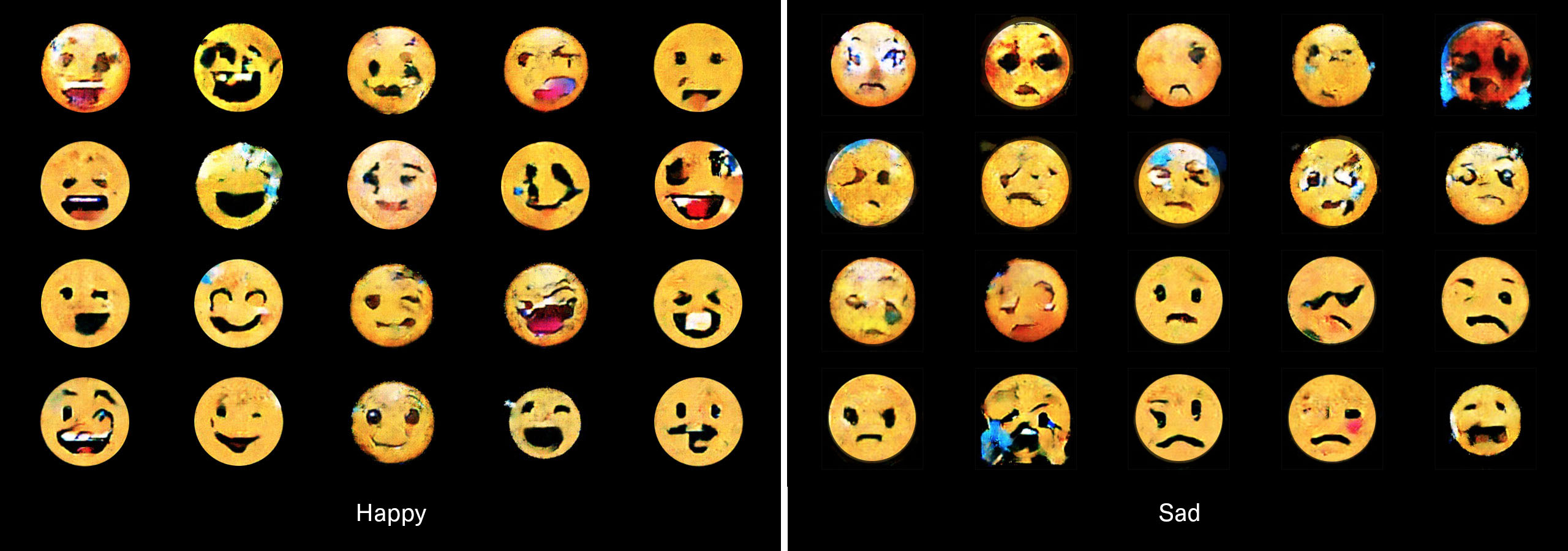

We tried to categorize some of the results. Most generated Emoji are a mixture of weird/new-looking expressions, but here are examples labeled with Neutral, Horror, Happy and Sad.

To be fair, it’s pretty difficult to draw the line since there’s a visual twist to nearly all of them. After all, this ambiguity is what makes the results so appealing to us.

The complete training process

Here are some samples from our output. Note the evolution in the beginning, from noisy nonsense to face-like structures. The video shows the complete training process: 25 epochs of training, which took 14 hours on a laptop without GPU acceleration. The video contains every 8th generated image, for a total of 10,040 frames.
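As a rough sanity check on the figures above, the arithmetic can be worked through in a few lines of Python. This is only a sketch under two assumptions: that the 10,040 frames correspond to exactly every 8th generated image (so the total is approximate), and that the 14 hours were spread evenly over the 25 epochs. The function and constant names are made up for illustration.

```python
# Figures quoted in the text above.
EPOCHS = 25
TRAINING_HOURS = 14
VIDEO_FRAMES = 10_040
FRAME_STRIDE = 8  # the video keeps every 8th generated image


def approx_generated_images(frames: int, stride: int) -> int:
    """Approximate number of generated images, assuming exactly one
    video frame per `stride` generated images."""
    return frames * stride


def minutes_per_epoch(hours: float, epochs: int) -> float:
    """Average wall-clock minutes per epoch, assuming an even spread."""
    return hours * 60 / epochs


if __name__ == "__main__":
    total = approx_generated_images(VIDEO_FRAMES, FRAME_STRIDE)
    print(f"about {total:,} generated images")
    print(f"about {minutes_per_epoch(TRAINING_HOURS, EPOCHS):.1f} minutes per epoch")
```

Under these assumptions the run produced roughly 80,000 images, at a bit over half an hour per epoch.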

Interactive AImoji Map

The following map shows the first 10,000 images from the training process—100 per row.

- Hover over the map to see bigger emoji.

- Press and hold the left mouse button to zoom in a bit.

- Double-click to view the map full-screen. (Hotkey: F)

- Right-click to save an emoji. (Hotkey: S)
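The grid described above (10,000 images, 100 per row) implies a simple mapping between an image's position in the training sequence and its cell on the map. The sketch below is an assumption about how such a lookup could work, not the map's actual code: it assumes images are placed left to right, then top to bottom, counted from zero, and both function names are hypothetical.

```python
from typing import Tuple

IMAGES_ON_MAP = 10_000  # first 10,000 images from the training process
IMAGES_PER_ROW = 100    # as stated in the text


def index_to_cell(index: int) -> Tuple[int, int]:
    """Return (row, column) for a zero-based image index.

    Assumes row-major order: left to right, then top to bottom.
    """
    if not 0 <= index < IMAGES_ON_MAP:
        raise ValueError(f"index {index} is not on the map")
    return divmod(index, IMAGES_PER_ROW)


def cell_to_index(row: int, column: int) -> int:
    """Inverse of index_to_cell under the same row-major assumption."""
    rows = IMAGES_ON_MAP // IMAGES_PER_ROW
    if not (0 <= row < rows and 0 <= column < IMAGES_PER_ROW):
        raise ValueError(f"cell ({row}, {column}) is not on the map")
    return row * IMAGES_PER_ROW + column


if __name__ == "__main__":
    print(index_to_cell(0))      # (0, 0): top-left corner
    print(index_to_cell(9_999))  # (99, 99): bottom-right corner
    print(cell_to_index(42, 7))  # 4207
```

If the map is indeed laid out this way, it has 100 rows, with the earliest, noisiest images at the top and later ones further down.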

This work is part of the graphic concept and communication design for the exhibition “UNCANNY VALUES. Artificial Intelligence & You”, a project of the MAK Vienna in the context of the Vienna Biennale for Change 2019: Brave New Virtues. Shaping Our Digital World (29 May – 6 October 2019).

Read more about this project here.

From the VIENNA BIENNALE 2019 brochure:

The Viennese design duo Process Studio has developed the exhibition’s visual, multimedia imparting of knowledge as well as the communication of UNCANNY VALUES in public spaces. As computer scientists, they worked their way into the technical foundations of neural networks and, with the help of a so-called Generative Adversarial Network (GAN), developed especially for the VIENNA BIENNALE 2019 a unique form of communication that truly never existed before. GANs are groups of algorithms for unsupervised learning. They are currently used, for example, to produce photorealistic pictures of people who never existed or deceptively real sounding, machine-generated texts that can be used for fake news. The implications and risks of such a technology are correspondingly wide-ranging.

Process Studio have confronted their GAN with a very special reality and language: all of the emoji currently in existence. As an international, universal visual language that is first and foremost about expressing emotions, emoji are uncannily suited to such a process. We can watch the GAN learning, trying to interpret what the usually yellow and round entities that make up a world actually are. Furthermore, we can watch it as it reacts to it, what supposed emotions it derives from it and creates anew. This results in thousands of AImoji, which emerge slowly and uncannily from behind white noise and begin to communicate with us. We do not yet know what they want to say to us but the conversation has begun. Some may seem monstrous to us, and it is unclear what emotions they are trying to express. What begins to become clear, however, is that when confronted with them we have to rethink and adjust our habits of reading the world.

Together with the AIfont, the AImoji is the Key Visual of the exhibition UNCANNY VALUES: a generative artwork and a process rather than a single still frame. Read the case study about the whole identity here.